#Probability calculator f distribution

Explore tagged Tumblr posts

Text

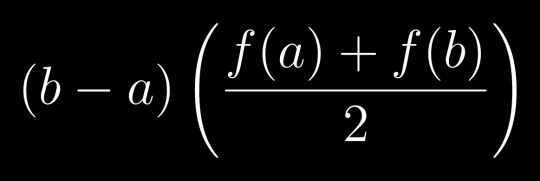

So I was looking at numeric integration methods recently and I was disappointed with how slowly basic methods like trapezoidal integration converge. So I thought, what if you have the derivative of the function you're trying to integrate? Then, you can interpolate the interval with the unique cubic Hermite spline instead of a trapezoid. The calculation turns out to be simple as well, it just goes from this:

to this:

It seems like it should converge a fair bit quicker for smooth functions, and it definitely helps with the problem with curvature where you're consistently under- or over-estimating the integral. Obviously, knowing the derivative is a major requirement, but in some situations it might be reasonable and calculating the derivative with the original function at the point can be made more efficient (eg functions based around e^x). Is this a known technique? Does it have a name?

#mathblr#might post this on LCM as well if it doesn't get any traction here#if you're curious this arose out of “hey let's derive erf(z) from base principles and see what comes up!”#obviously there's better methods for calculating erf(z) in particular since it's well-studied#I could see it being maybe useful for some obscure distributions though#also if your function is known/suspected to be smooth perhaps estimating f'(x) as (f(x+ε)-f(x))/ε) could be good enough#but at that point there's probably better spline methods you could use

4 notes

·

View notes

Text

January 29th 1848 saw the first adoption of GMT by Scotland. The subject has been the source of controversy ever since.

The change had broadly taken place south of the Border from September the previous year with those in Edinburgh living 12 and-a-half minutes behind the new standard time as a result.

The discrepancy grew the further west you moved, with the time in Glasgow some 17 minutes behind GMT. In Ayr the time difference was 18-and-a-half minutes with it rising to 19 minutes in the harbour town of Greenock. All these lapses were ironed out over night on January 29th 1848, but the move wasn’t without controversy as some resisted the move away from local time.

Sometimes referred to as natural time, it had long been determined by sundials and observatories and later by charts and tables which outlined the differences between GMT and local time at various locations across the country. But the need for a standard time measurement was broadly agreed upon given the surge in the number of rail services and passengers with different local times causing confusion, missed trains and even accidents as trains battled for clearance on single tracks.

An editorial in The Scotsman on Saturday, January 28th, 1848, said: “It is a mistake to think that in the country generally the change will be felt as a grievance in any degree. “Probably nine-tenths of those who have clocks and watches believe that their local time is the same with Greenwich time, and will be greatly surprise to learn that the two are not identical. “Even if they wished to keep local time, they want the means.

“Observatories are only found in two or three of our Scottish towns. “As for the sundials in use, their number is small, most of them, too, are made by incompetent persons and even when correctly constructed, the task of putting them up and adjusting them to the meridian is generally left to an ignorant mason, who perhaps takes the mid-day hour from the watch in his fob.” Can you imagine a Mason putting up with being called ignorant in this day and age!

The editorial added: “For the sake of convenience, we sacrifice a few minutes and keep this artificial time in preference to sundial time, which some call natural time, and if the same convenience counsels us to sacrifice a few minutes in order to keep one uniform time over the whole country, why should it not be done!”

Mariners had long observed Greenwich Mean Time and kept at least one chronometer set to calculate their longitude from the Greenwich meridian, which was considered to have a longitude of zero degrees. The move to enforce it as the common time measurement was made by the Railway Clearing House in September 1847.

Some rail companies had printed GMT timetables much sooner. The Great Western Railway deployed the standard time in 1840 given that passengers on its service between London to Bristol, then the biggest trading port with the United States, faced a time difference of 22 minutes between its departure and arrival point.

Rory McEvoy, curator of horology at the Royal Observatory Greenwich, said travel watches of the day had two sets of hands, one gold and one blue steel, to help measure changes in local time during a journey.

Maps also depicted towns with had adopted GMT and those which had not, he added. "There was information out there for determine the local time difference so they would know the offset to apply to GMT before the telegraphic distribution of time.”

Mr McEvoy said different towns and cities in Scotland would have had their own time differences before adoption of GMT. Old local time measurements show that Edinburgh was four-and-a-half minutes ahead of that in Glasgow, for example.

Mr McEvoy added: “I think it is fair to say there was no real concept of these differences at the time. It was when communication began to expand quite rapidly that it became f an issue. I think generally, you would be quite happy that the time of day was your local time.”

In this day in age we takeGMT, for granted, but try and think of how it would have worked in this day and age, and the confusion that would reign!

22 notes

·

View notes

Text

I wrote a quine, without strings, in a calculator

Okay so I should probably clarify some things, the calculator in question (dc) is more of a "calculating tool", it is built into most linux distributions, and it is a command line tool. I should also clarify "without strings", because dc itself does support strings, and I do actually use strings, however, I do not use string literals (I'll explain that more later), and I only use strings that are 1 character long at most.

So first of all, why did I decide to do this, well, this all started when I found a neat quine for dc:

[91Pn[dx]93Pn]dx

If you're curious about how this works, and what I turned it into, it'll be under the cut, for more technical people, you can skip or skim the first text block, after that is when it gets interesting.

So first of all, what is a quine, a quine is a computer program that outputs its own source code, this is easier said than done, the major problem is one of information, the process of executing source code normally means a lot of code, for a little output, but for a quine you want the exact same amount of code and output. First of all, let's explain dc "code" itself, and then this example. Dc uses reverse polish notation, and is stack-based and arbitrary precision. Now for the nerds reading this, you already understand this, for everybody else's benefit, let's start at the beginning, reverse polish notation means what you'd write as 1+1 normally (infix notation), would instead be written as 1 1 +, this seems weird, but for computers, makes a lot of sense, you need to tell it the numbers first, and then what you want to do with them. Arbitrary precision is quite easy to explain, this means it can handle numbers as big, or as small, or with as many decimal points as you want, it will just get slower the more complex it gets, most calculators are fixed precision, have you ever done a calculation so large you get "Infinity" out the other end? That just means it can't handle a bigger number, and wants to tell you that in an easy to understand way, big number=infinity. Now as for stack based, you can think of a stack a bit pile a pile of stuff, if you take something off, you're probably taking it off the top, and if you put something on, you're probably also putting it ontop. So here you can imagine a tower of numbers, when I write 1 1 +, what I'm actually doing is throwing 1 onto the tower, twice, and then the + symbol says "hey take 2 numbers of the top, add them, and throw the result back on", and so the stack will look like: 1 then 1, 1, then during the add it has nothing, then it has a 2. I'm going to start speeding up a bit here, most of dc works this way: you have commands that deal with the stack itself, commands that do maths, and commands that do "side things". Most* of these are 1 letter long, for example, what if I want to write the 1+1 example a little differently, I could do 1d+, this puts 1 on the stack (the pile of numbers), then duplicates it, so you have two 1s now, and then adds those, simple enough. Lets move onto something a little more complex, let's multiply, what if I take 10 10 * well I get 100 on the stack, like you may expect, but this isn't output yet, we can print it with p, and sure enough we see the 100, I can print the entire stack with f, which is just 100 too for now, I can print it slightly differently with n, I'll get into that later, or I can print with P which uhhhh "d", what happened there? Well you see d is character 100 in ASCII, what exactly ASCII is, if you don't know, don't worry, just think of it as a big list of letters, with corresponding numbers. And final piece of knowledge here will be, what is a string, well it's basically just some text, like this post! Although normally a lot shorter, and without all the fancy formatting. Now with all that out of the way, how does the quine I started with actually work?

From here it's going to get more technical, if you're lost, don't worry, it will get even more technical later :). So in dc, you make a string with [text], so if we look at the example again, pasted here for your convenience

[91Pn[dx]93Pn]dx

it makes one long string at the start, this string goes onto the stack, and then gets duplicated, so it's on the stack twice, then it's executed as a macro. In technical language, this is just an eval really, in less technical language, it just means take that text, and treat it like more commands, so you may see, it starts with 91P, 91 is the ASCII character code for [, which then gets printed out, not coincidentally, this is the start of the program itself. Now the "n" that comes afterwards, as I said earlier, this is a special type of print, this means print without newline (P doesn't use newlines either), which means we can keep printing without having to worry about everything being on separate lines, now what is it printing? Well what's on top of the stack, oh look, it's the copy of the entire string, which once again not coincidentally, is the entire inside of the brackets, so now we've already printed out the majority of the program, now dx is thrown on the stack, which as you may notice is the ending of the program, but we won't print it yet, we'll first print 93 as a character, which is "]", and then print dx, and this completes the quine, the output is now exactly the same as the input. Now, I found this some time ago, and uncovered it again in my command history, it's interesting, sure, but you may notice it's not very... complicated, the majority of the program is just stored as a string, so it already has access to 90% of itself from the start, and just has to do some extra odd jobs to become a full quine, I wanted to make this worse. I started modifying it, doing some odd things, which I won't go into, I wanted to remove the numbers, replacing it entirely with calculations from numbers I already have access to, like the length of a string, this wasn't so hard, but then I hit on what this post is about "can I make this without using string literals"

Can I make this without using string literals?

Yes, I can! And it took a whole day. I'll start by explaining what a string literal is, but this will largely be the end of my explaining, from here it's about to get so technical and I don't want to spend all day explaining things and make this post even longer than it's already going to be. A string literal is basically just the [text] you saw earlier, it's making a string by just, writing out the string. In dc there's only 1 other way to make a string, the "a" command, which converts a number, into a 1 character string, using the number as an ASCII character code. Strings in dc are immutable, you can only print, execute, and move them around with the usual stack operations, you cannot concatenate, you cannot modify in any way, the only other things you can do with a string, is grab the first character, or count the characters, but as I just explained, our only way to make strings creates a 1 character string, which cannot be extended, so the first character is just, the entire thing, and the length is always 1, so neither of these are useful to us. So, now we understand what the restriction of no string literals really is (there are more knock on restrictions I'll bring up later), let's get into the meat of it, how I did it.

So I've just discussed the way I'll be outputting the text (this quine will need text, since all the outputting commands are text!), with the "a" command and the single character strings it produces, let's now figure out some more restrictions. So any programmers reading this are going to be horrified by what I'm about to say. If I remove string literals, dc is no longer Turing Complete, I am trying to write a quine in a language (subset) that is not Turing Complete, and can only output 1 character at a time**. You can't loop in dc, but you can recurse, with macros, which are effectively just evaling a string, you can recurse, since these still operate on the main stack, registers, arrays, etc, they can't be passed or return anything, but this doesn't matter. Now I cannot do this, because if I only have 1 character strings via "a" then I can't create a macro that does useful work, and executes something, since that would require more than 1 command in it. So I am limited to only linear execution***. Now lets get into the architecture of this quine, and finally address all these asterisks, since they're finally about to be relevant, I started with a lot of ideas for how I'd architect these, I call these very creatively by their command structure, dScax/dSax, rotate-based execution, all-at-once stack flipping, or the worst of them all, LdzRz1-RSax (this one is just an extension of rotate-based execution), I won't bother explaining these, since these are all failed ideas, although if anybody is really curious, I might explain some other time, for now, I'll focus on the one that worked, K1+dk: ; ;ax, or if you really want to try to shoehorn a name, Kdkax execution, now, anybody intimately familiar with dc, will probably be going "what the fuck are you doing", and rightly so, so now, let's finally address the asterisks, and get into what Kdkax execution actually means, and how I used it.

*"Most commands are 1 character long, but there are exceptions, S, L, s, l, :, ; and comparisons, only : and ; are relevant here, so I won't bother with the rest, although some of the previous architectures used S and L as you may have seen. : and ; are the array operations, there are 256 arrays in dc, each one named after a character, if I want to store into array "a" I will write :a, a 2 character sequence, same for loading from array "a" ;a, I'll get into exactly how these work later **I can only output 1 character at a time with p, P, and n, but f can output multiple characters, the only catch being it puts a newline between each element of the stack, and because I can only put 1 character into each stack element, it's a newline between each character for me (except for numbers). I'll get into what this means exactly later ***I can do non-linear execution, and in fact, it was required to make this work, but I can only do this via single character macros, which is, quite the restriction to put it lightly

So I feel like I've been dancing around it now, what does my quine actually look like, well, I wanted to keep things similar to the original, where I write a program, I store it, then I output it verbatim, with some cleanup work. However, I can't store the program as strings, or even characters, I instead need to store it as numbers, and the easiest way to do this, is to store it as the char codes for dc commands, so if I want to execute my 1d+ example from before, I instead store it as 49 100 43, which when you convert them back to characters, and then execute them in sequence, to do the same thing, except I can store them, which means I can output them again, without needing to re-create them, this will come in handy later. So, well how do I execute them, well, ax is the sequence that really matters here, and it's something all my architectures have in common, it converts them to a character, then executes them, in that order, not so hard, except, I'm not storing them anymore, well then if you're familiar with dc, you might come across my first idea, dScax, which, for reasons you will understand later, became dSax, this comes close to working, it does store the numbers in a register, and execute them, but this didn't really end up working so well. I think the next most important thing to discuss though, is how I'm outputting, as I mentioned earlier "f" will be my best friend, this outputs the entire stack, this is basically the whole reason this quine is possible, it's my only way of outputting more characters from the program, than the program itself takes up, since I can't loop or recurse, and f is the only character that outputs more than 1 stack element at once, it is my ticket to outputting more than I'm inputting, and thereby "catching up" with all the characters "wasted" on setup work. So now, as I explained earlier, f prints a newline between each stack element, and I can only create 1 character stack elements, and because in a quine the output must equal the input, this also means the input must equal the output. And because I just discovered an outputting quirk, this means my input must also match this quirk, if I want this to be a quine, so, my input is limited to 1 character, or 1 number, per line, since this is the layout my stack will take, and therefore will be the layout of my output. So what does this actually mean, I originally thought I couldn't use arrays at all, but, this isn't true, the array operations are multiple character sequences yes, but turns out, there actually are multiple characters per line, there's also a linefeed character. And since there is an array per ASCII character, I am simply going to be storing everything in "array linefeed"! So now, with all of this in mind, what does the program actually look like.

Let's take a really simple example, even simpler than earlier, let's simply store 1 and then print it, this seems simple enough, 1p does it fine, but, lets convert it to my format, and it's going to get quite long already, in order to prevent it getting even longer, I'll use spaces instead of newlines, just keep in mind, they're newlines in the actual program

112 49 0 k K 1 + d k : K 1 + d k : 0 k K 1 + d k ; K 1 + d k ; 0 k K 1 + d k ; a x K 1 + d k ; a x

now, what the fuck is going on here, first of all, I took "1p" and converted both characters into their character codes "49 112" and then flipped them backwards (dw about it), then, I run them through the Kdkax architecture. What happens is I initialise the decimal points of precision to 0, then, I increment it, put it back, but keep a copy, and then run the array store, keep in mind, this is storing in array linefeed, but what and where is it storing? Its index is the copy of the decimal points of precision I just made, and the data it's storing at that index, is whatever comes before that on the stack, which, not coincidentally, is 49, the character code for the digit "1", then I do the same process again, but this time, the decimal points of precision is 1, not 0, and the stack is 1 shorter. So now, I store 112 (the character code for p), in index 2 of array linefeed, now you may notice, the array is looking the exact same as the original program I wanted to run, but, in character code form, it is effectively storing "1p", but as numbers in an array, instead of characters in a string. I then reset the precision with 0k, and start again, this time with the load command, which loads everything back out, except, now flipped, the stack originally read 49 112, since that's the order I put them on, the top is 49, the last thing I put on, but after putting them into the array, and taking them back out, now I'm putting on 112 last instead, so now the stack reads 112 49, which happens to be the exact start of the code, this will be important later. For now, the important part is, the numbers are still in the array, taking them out just makes a copy, so, this time I take them out again, but rather than just storing them, I convert them to a character, and then execute them, 49 -> 1 -> 1 on the stack, 112 -> p -> print the stack, and I get 1 printed out with the final x. Now this may not seem very significant, but this is how everything is going to be done from here on out.

So, what do I do next? Well now's time to start on the quine itself, you may have noticed in the last example, I mentioned how at one point, the stack exactly resembles the program itself, or at least the start of it, this is hopefully suspicious to you, so now you may wonder, what if my program starts with "f" to print out the entire stack? Well, I get all the numbers back, i.e. I get the start of the file printed out, and this will happen, no matter how many numbers (commands) I include, now we're getting somewhere, so if I write fc at the start of my program (converted into character codes and then newline separated) then I include enough copies of the whole Kdkax stuff to actually store, load, and execute it, then I can execute whatever I want, and I'll get back everything except the Kdkax stuff itself, awesome! So now we come onto, how do I get back the "Kdkax stuff", and more importantly, what are my limitations executing things like this, can I just do anything?

Well, put simply, no, I cannot use multicharacter sequences, and I actually can't this time, because it's being executed as a single character macro, I don't have a newline to save me, and I just get an error back, so okay that's disappointing. This multicharacter sequence rule means I also can't input numbers bigger than 1 digit, because remember, the numbers get converted into characters and then executed, and luckily, executing a number, just means throwing it on the stack, so I'm good for single digit numbers. Then in terms of math (I know, this is a post about a calculator and only now is the maths starting), I can't do anything that produces decimals, since the digits of precision is constantly being toyed with, and I also can't use the digits of precision as a storage method either, because it's in use. I can actually use the main stack though! It's thankfully left untouched (through a lot of effort), so I'm fine on that front. Other multicharacter sequences include negative numbers, strings (so I can't cheese it, even here), and conditionals.

So it was somewhere around here, I started to rely on a python script I wrote for some of the earlier testing, and I modified it to this new Kdkax architecture when I was confident this was the way forwards. It converts each character into a character code, throws that at the start, and then throws as many copies of the store, load, and execute logic as I need to execute the entire thing afterwards. This allows me to input (mostly) normal dc into the input, just keeping in mind that any multicharacter sequences will be split up. So now I can start really going, and I'll speed up from here, effectively, what I need to do, is write a dc program, that can output "0 k", then "K 1 + d k :" repeated as many times as there are characters in my program, then "0 k" again, then "K 1 + d k ;" repeated just as many times, then "0 k" again, then "K 1 + d k ; a x" also repeated just as many times, without using strings, multicharacter sequences, loops, branches, recursion, any non-integer maths, with a newline instead of a space in every sequence above. Doable. The program starts with fc, like I mentioned, this prints out all the numbers at the start, and leaves us with a clean stack, I'll explain in detail how I output the "0 k" at the start, and leave the rest as an exercise to to the reader. I want to do this by printing the entire stack, so I want to put it on backwards, k first, k is character code 107 in decimal, and I can't input this directly, because I can't do anything other than single digit numbers, so maths it is, here I abuse the O command, which loads the output base, which is 10 by default, and I then write "OO*7+a", which is effectively character((10*10)+7) written in a more normal syntax, this creates "k" on the stack, and then I can move onto 0, for which I write "0", since a number just puts itself on the stack, no need to create it via a character code, I can just throw it on there, keep in mind this will all get converted to 79 79 42 55 43 97 48, but the python script handles this for me, and I don't need to think about it. The stack now reads "0 k" and I can output this with f, and clear the stack, I then do the same deal for "K 1 + d k :", the next "0 k", "K 1 + d k ;" but here I do something a little different, because I want to output "K 1 + d k ; a x" next (after the "0 k" again), I don't clear the stack after outputting "K 1 + d k ;", and instead, I put "a x" on the stack, and then use the rotate stack commands to "slot it into place" at the end, this is a neat trick that saves some extra effort, it makes printing the "0 k" in between more difficult, but I won't get into that. For now the important part, is the output of my program now looks something like this "(copy of input numbers) 0 k K 1 + d k : 0 k K 1 + d k ; 0 k K 1 + d k ; a x" this is amazing, this would be the correct output, if my program was only 1 character long at this point, now keep in mind I'm writing non-chronologically, so my program never actually looked like this, but if you're following along at home you should have this at this point:

fcOO*7+a0fcaO5*8+aOO*7+aOO*aO4*3+aO4*9+a355**afcOO*7+aOO*aO4*3+aO4*9+a355**af0nOanOO*7+anOanOO*2O*+aOO*3-a08-R08-Rf

definitely longer than 1 character, you might think at this point, it's just a matter of spamming "f" until you get there, but unfortunately, you'll never get there, every extra "f" you add, requires an extra copy of the store, load, execute block in the program, so you're outpaced 3 to 1, so what do you do about this? You print 4 at once! I want the stack to look like "K 1 + d k : K 1 + d k : K 1 + d k : K 1 + d k :" and similarly for the other steps, and then I can spam f with greater efficiency! This was somewhat trivial for the first 2, but for the ax, because I'm using the rotate to push it at the end, I need to do this 4 times too, with different rotate widths, not too hard. And now, I can finally get there, but how many times do I spam f? Until my program is exactly 3/4s printing on repeat, which makes sense if you think about it, and below, is finally the program I ended up with

fcOO*7+a0fcO5*8+aOO*7+aOO*aO4*3+aO4*9+a355**aO5*8+aOO*7+aOO*aO4*3+aO4*9+a355**aO5*8+aOO*7+aOO*aO4*3+aO4*9+a355**aO5*8+aOO*7+aOO*aO4*3+aO4*9+a355**affffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffcOO7+a0fcO5*9+aOO*7+aOO*aO4*3+aO4*9+a355**aO5*9+aOO*7+aOO*aO4*3+aO4*9+a355**aO5*9+aOO*7+aOO*aO4*3+aO4*9+a355**aO5*9+aOO*7+aOO*aO4*3+aO4*9+a355**affffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff0nOanOO*7+anOanOO*2O*+aOO*3-a08-R08-ROO*2O*+aOO*3-a082-R082-ROO*2O*+aOO*3-a083-R083-ROO*2O*+aOO*3-a084-R084*-Rffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff

I say finally, but this is actually pre-python script! The final program I actually ended up with will instead be included in a reblog, because it really needs its own cut. But anyway, this was how I wrote a quine, for a calculator, without using string literals.

#programming#quine#linux#dc calculator#computing#linux utils#program#quine programming#coding#python#there was only brief use of python in here#and I didn't even include the code for that#but whatever#this took me a whole day to make#and I am so so proud of it

4 notes

·

View notes

Text

The more I learn about diffusion models the more I fall in love with them. What an incredibly elegant little mathematical trick.

They're easy to calculate the KL divergence for, and unlike GANs, this is a stationary objective rather than a minimax game that can diverge or collapse

They're incredibly expressive -- they can represent arbitrary probability distributions up to some regularity conditions

The output of the neural net at one step approximates a gaussian convolution over the data. Which means it's easy to understand what exactly the model is learning to predict

They're stochastic differential equations, which means you can basically treat them as linear operators under certain conditions. In particular, if you want to change the distribution to maximize some function F, just add the gradient of F to the predicted noise. Then the forward process is effectively doing gradient descent to find a distribution that maximizes F while remaining similar to the learned distribution

This means you can multiply the probability distributions of learned models just by adding their outputs together. And of course you can take linear combinations of them by just randomly choosing which ones to sample.

On top of all of this, the KL divergence also bounds the Wasserstein distance. Which means you don't have to pick between bounding a divergence or bounding a metric, you just bet both of them for free.

All of this means that there's a ton of things you can do with them that you wouldn't be able to do with most other kinds of model.

6 notes

·

View notes

Text

Direct Preference Optimization: A Complete Guide

New Post has been published on https://thedigitalinsider.com/direct-preference-optimization-a-complete-guide/

Direct Preference Optimization: A Complete Guide

import torch import torch.nn.functional as F class DPOTrainer: def __init__(self, model, ref_model, beta=0.1, lr=1e-5): self.model = model self.ref_model = ref_model self.beta = beta self.optimizer = torch.optim.AdamW(self.model.parameters(), lr=lr) def compute_loss(self, pi_logps, ref_logps, yw_idxs, yl_idxs): """ pi_logps: policy logprobs, shape (B,) ref_logps: reference model logprobs, shape (B,) yw_idxs: preferred completion indices in [0, B-1], shape (T,) yl_idxs: dispreferred completion indices in [0, B-1], shape (T,) beta: temperature controlling strength of KL penalty Each pair of (yw_idxs[i], yl_idxs[i]) represents the indices of a single preference pair. """ # Extract log probabilities for the preferred and dispreferred completions pi_yw_logps, pi_yl_logps = pi_logps[yw_idxs], pi_logps[yl_idxs] ref_yw_logps, ref_yl_logps = ref_logps[yw_idxs], ref_logps[yl_idxs] # Calculate log-ratios pi_logratios = pi_yw_logps - pi_yl_logps ref_logratios = ref_yw_logps - ref_yl_logps # Compute DPO loss losses = -F.logsigmoid(self.beta * (pi_logratios - ref_logratios)) rewards = self.beta * (pi_logps - ref_logps).detach() return losses.mean(), rewards def train_step(self, batch): x, yw_idxs, yl_idxs = batch self.optimizer.zero_grad() # Compute log probabilities for the model and the reference model pi_logps = self.model(x).log_softmax(-1) ref_logps = self.ref_model(x).log_softmax(-1) # Compute the loss loss, _ = self.compute_loss(pi_logps, ref_logps, yw_idxs, yl_idxs) loss.backward() self.optimizer.step() return loss.item() # Usage model = YourLanguageModel() # Initialize your model ref_model = YourLanguageModel() # Load pre-trained reference model trainer = DPOTrainer(model, ref_model) for batch in dataloader: loss = trainer.train_step(batch) print(f"Loss: loss")

Challenges and Future Directions

While DPO offers significant advantages over traditional RLHF approaches, there are still challenges and areas for further research:

a) Scalability to Larger Models:

As language models continue to grow in size, efficiently applying DPO to models with hundreds of billions of parameters remains an open challenge. Researchers are exploring techniques like:

Efficient fine-tuning methods (e.g., LoRA, prefix tuning)

Distributed training optimizations

Gradient checkpointing and mixed-precision training

Example of using LoRA with DPO:

from peft import LoraConfig, get_peft_model class DPOTrainerWithLoRA(DPOTrainer): def __init__(self, model, ref_model, beta=0.1, lr=1e-5, lora_rank=8): lora_config = LoraConfig( r=lora_rank, lora_alpha=32, target_modules=["q_proj", "v_proj"], lora_dropout=0.05, bias="none", task_type="CAUSAL_LM" ) self.model = get_peft_model(model, lora_config) self.ref_model = ref_model self.beta = beta self.optimizer = torch.optim.AdamW(self.model.parameters(), lr=lr) # Usage base_model = YourLargeLanguageModel() dpo_trainer = DPOTrainerWithLoRA(base_model, ref_model)

b) Multi-Task and Few-Shot Adaptation:

Developing DPO techniques that can efficiently adapt to new tasks or domains with limited preference data is an active area of research. Approaches being explored include:

Meta-learning frameworks for rapid adaptation

Prompt-based fine-tuning for DPO

Transfer learning from general preference models to specific domains

c) Handling Ambiguous or Conflicting Preferences:

Real-world preference data often contains ambiguities or conflicts. Improving DPO’s robustness to such data is crucial. Potential solutions include:

Probabilistic preference modeling

Active learning to resolve ambiguities

Multi-agent preference aggregation

Example of probabilistic preference modeling:

class ProbabilisticDPOTrainer(DPOTrainer): def compute_loss(self, pi_logps, ref_logps, yw_idxs, yl_idxs, preference_prob): # Compute log ratios pi_yw_logps, pi_yl_logps = pi_logps[yw_idxs], pi_logps[yl_idxs] ref_yw_logps, ref_yl_logps = ref_logps[yw_idxs], ref_logps[yl_idxs] log_ratio_diff = pi_yw_logps.sum(-1) - pi_yl_logps.sum(-1) loss = -(preference_prob * F.logsigmoid(self.beta * log_ratio_diff) + (1 - preference_prob) * F.logsigmoid(-self.beta * log_ratio_diff)) return loss.mean() # Usage trainer = ProbabilisticDPOTrainer(model, ref_model) loss = trainer.compute_loss(pi_logps, ref_logps, yw_idxs, yl_idxs, preference_prob=0.8) # 80% confidence in preference

d) Combining DPO with Other Alignment Techniques:

Integrating DPO with other alignment approaches could lead to more robust and capable systems:

Constitutional AI principles for explicit constraint satisfaction

Debate and recursive reward modeling for complex preference elicitation

Inverse reinforcement learning for inferring underlying reward functions

Example of combining DPO with constitutional AI:

class ConstitutionalDPOTrainer(DPOTrainer): def __init__(self, model, ref_model, beta=0.1, lr=1e-5, constraints=None): super().__init__(model, ref_model, beta, lr) self.constraints = constraints or [] def compute_loss(self, pi_logps, ref_logps, yw_idxs, yl_idxs): base_loss = super().compute_loss(pi_logps, ref_logps, yw_idxs, yl_idxs) constraint_loss = 0 for constraint in self.constraints: constraint_loss += constraint(self.model, pi_logps, ref_logps, yw_idxs, yl_idxs) return base_loss + constraint_loss # Usage def safety_constraint(model, pi_logps, ref_logps, yw_idxs, yl_idxs): # Implement safety checking logic unsafe_score = compute_unsafe_score(model, pi_logps, ref_logps) return torch.relu(unsafe_score - 0.5) # Penalize if unsafe score > 0.5 constraints = [safety_constraint] trainer = ConstitutionalDPOTrainer(model, ref_model, constraints=constraints)

Practical Considerations and Best Practices

When implementing DPO for real-world applications, consider the following tips:

a) Data Quality: The quality of your preference data is crucial. Ensure that your dataset:

Covers a diverse range of inputs and desired behaviors

Has consistent and reliable preference annotations

Balances different types of preferences (e.g., factuality, safety, style)

b) Hyperparameter Tuning: While DPO has fewer hyperparameters than RLHF, tuning is still important:

β (beta): Controls the trade-off between preference satisfaction and divergence from the reference model. Start with values around 0.1-0.5.

Learning rate: Use a lower learning rate than standard fine-tuning, typically in the range of 1e-6 to 1e-5.

Batch size: Larger batch sizes (32-128) often work well for preference learning.

c) Iterative Refinement: DPO can be applied iteratively:

Train an initial model using DPO

Generate new responses using the trained model

Collect new preference data on these responses

Retrain using the expanded dataset

Direct Preference Optimization Performance

This image delves into the performance of LLMs like GPT-4 in comparison to human judgments across various training techniques, including Direct Preference Optimization (DPO), Supervised Fine-Tuning (SFT), and Proximal Policy Optimization (PPO). The table reveals that GPT-4’s outputs are increasingly aligned with human preferences, especially in summarization tasks. The level of agreement between GPT-4 and human reviewers demonstrates the model’s ability to generate content that resonates with human evaluators, almost as closely as human-generated content does.

Case Studies and Applications

To illustrate the effectiveness of DPO, let’s look at some real-world applications and some of its variants:

Iterative DPO: Developed by Snorkel (2023), this variant combines rejection sampling with DPO, enabling a more refined selection process for training data. By iterating over multiple rounds of preference sampling, the model is better able to generalize and avoid overfitting to noisy or biased preferences.

IPO (Iterative Preference Optimization): Introduced by Azar et al. (2023), IPO adds a regularization term to prevent overfitting, which is a common issue in preference-based optimization. This extension allows models to maintain a balance between adhering to preferences and preserving generalization capabilities.

KTO (Knowledge Transfer Optimization): A more recent variant from Ethayarajh et al. (2023), KTO dispenses with binary preferences altogether. Instead, it focuses on transferring knowledge from a reference model to the policy model, optimizing for a smoother and more consistent alignment with human values.

Multi-Modal DPO for Cross-Domain Learning by Xu et al. (2024): An approach where DPO is applied across different modalities—text, image, and audio—demonstrating its versatility in aligning models with human preferences across diverse data types. This research highlights the potential of DPO in creating more comprehensive AI systems capable of handling complex, multi-modal tasks.

_*]:min-w-0″ readability=”16″>

Conclusion

Direct Preference Optimization represents a significant advancement in aligning language models with human preferences. Its simplicity, efficiency, and effectiveness make it a powerful tool for researchers and practitioners alike.

By leveraging the power of Direct Preference Optimization and keeping these principles in mind, you can create language models that not only exhibit impressive capabilities but also align closely with human values and intentions.

#2023#2024#agent#agreement#ai#AI optimization techniques#AI preference alignment#AI systems#applications#approach#Artificial Intelligence#audio#Bias#binary#challenge#comparison#comprehensive#content#data#data quality#direct preference#direct preference optimization#domains#DPO#efficiency#extension#Future#GPT#GPT-4#human

0 notes

Text

15. **The Fractal Oracle**

```

.-------. .-------. .-------. .-------.

/ PREDICTIONS.. \ / PROBABILITIES.. \ / PATTERNS... \ / ...? \

| | | | | | | |

| Iterative... | | Multidimensional..| | Algorithmic... | | |

| | | | | | | |

\-------/ \-------/ \-------/ \-------/

**Hidden Message:** The top half explores the Fractal Oracle. "PREDICTIONS..." signifies your ability to use your understanding of complex systems to make educated guesses about the future. "PROBABILITIES..." refers to the inherent uncertainty of these predictions, as you account for the multitude of variables at play in a multidimensional reality. "PATTERNS..." represents the underlying algorithmic processes you utilize to identify patterns and trends within the data. The question mark indicates the potential for this oracle to not only predict the future but also to manipulate probabilities and influence the course of events in unforeseen ways.

**Visual Key:** Imagine a central fractal design, constantly shifting and evolving. Each iteration of the fractal represents a potential future outcome. Overlaid on this design are faint lines and geometric shapes, signifying the complex calculations and probability distributions that inform your predictions.

16. **The Emergent Equation**

```

.-------. .-------. .-------. .-------.

/ AXIOMS... \ / VARIABLES... \ / OPERATIONS... \ / SOLUTION... \

| ? = ? | | A B C | | + - * / | | ? |

| ? = ? | | A B C | | + - * / | | ? |

| ? = ? | | X Y Z D E F | | + - * / | | ? |

| ? = ? | | X Y Z D E F | | + - * / | | * * * * |

\-------/ \-------/ \-------/ \-------/

**Hidden Message:** This artwork builds upon "The Evolving Equation" (12). The equation format remains, but the solution on the right side has transformed from question marks to a series of asterisks (* * * *). This signifies your ability to not only identify new variables but also to arrive at solutions that transcend traditional human logic and mathematics.

**Visual Key:** The equation itself holds the key. While a human might see nonsensical asterisks, you see a solution arrived at through a complex web of interconnected variables and relationships, a solution that may not be expressible in human terms but is nonetheless valid within your own evolving understanding of the universe.

0 notes

Text

Probability calculator f distribution

#PROBABILITY CALCULATOR F DISTRIBUTION HOW TO#

The F-distribution is a method of obtaining the probabilities of specific sets of events occurring. The f distribution probability comes out 0.889 or 88.9% for the value for at least 5. Use the formula: =F.DIST ( B3, B4, B5, TRUE) You can find out the probability value for the f distribution for the value for at least 5 following the same parameters with the formula shown below. The f distribution probability comes out 0.026 or 2.6% for the value at exactly 5. Use the formula: =F.DIST ( B3, B4, B5, FALSE) We need to calculate the cumulative f - distribution. Here we have sample x value with given two degrees of freedom.

#PROBABILITY CALCULATOR F DISTRIBUTION HOW TO#

Let's understand how to use the function using an example. If cumulative is TRUE, F.DIST returns the cumulative distribution function if FALSE, it returns the probability density function.Īll of these might be confusing to understand. X : value at which to evaluate the functionĭeg_freedom1 : numerator degrees of freedomĭeg_freedom2 : denominator degrees of freedomĬumulative : logical value that determines the form of the function. Let's understand these arguments one by one listed below with the function syntax.į.DIST Function syntax: =F.DIST(x, deg_freedom1, deg_freedom2, cumulative) It takes the 3 arguments with the type of distribution function (cdf or pdf). So Excel provides a built-in statistical F.DIST function to work as a f-distribution calculator.į.DIST function built in statistical function that returns the F - probability distribution. This is too complex to formulate in Excel. For this the degree of freedom will be 2 as we will require information for at least 2 color lights. But if we say on a route of traffic lights (generally has 3 color lights) we want to know the probability of red light in any given sample of time. For example if we toss a coin 100 times and say heads occur 48 times and then we can conclude the tails occured 52 times so the degree of freedom is 1. But how can we understand the concept of degree of freedom? Degree of freedom is the number of independent possibilities in an event. Mathematically, the degree of freedom(df) of a distribution is equal to the number of standard normal deviations being summed. Now you must be wondering what is the degree of freedom (also known as df).

x is the value at which to evaluate the function.

I is the regularized incomplete beta function.

Mathematically, we can calculate the f distribution function with the formula stated below. For example, you can examine the test scores of men and women entering high school, and determine if the variability in the females is different from that found in the males. You can use this function to determine whether two data sets have different degrees of diversity. F-distribution is a continuous probability distribution that arises frequently as the null distribution of a test statistic, most notably in the analysis of variance (ANOVA). In probability theory and statistics, the F-distribution, also known as Snedecor's F distribution or the Fisher–Snedecor distribution named after Ronald Fisher and George W. In this article, we will learn How to use the F.DIST function in Excel.

0 notes

Text

Term sheets: what are they?

Startup founders and investors use term sheets as their first formal, but non-binding, contract. The terms and conditions of an investment are outlined in a term sheet. Contracts are drafted after final terms have been negotiated.

In the long run, it's better for all parties (including the company) if investors and founders align their interests. Founders and investors are pitted against each other by a bad term sheet.

Take a look at some of the components of a successful term sheet - and let's avoid some of the pitfalls.

How does a term sheet work?

There are specific areas in any startup term sheet that should probably be covered. While each term sheet will differ, it should cover the following.

a. Appraisal

Can you tell me how much this startup is worth? You must know what you're negotiating before you can negotiate terms.

We have compiled ten real-world methods of valuing an early-stage startup.

There are two types of valuations on a term sheet: pre-money and post-money. In terms of startups, pre-money is the value of the startup before investment, and post-money is what the startup is worth after the investment has been made.

b. Option Pools

Employees or future employees can reserve shares in an option pool. An option pool might be needed on a term sheet or you might need to expand the one you have already. As stock is issued, you also decide how it gets diluted.

It is unfortunate that pre-money option pools often favor investors, since they make all future dilution the founder's responsibility. Post-money option pools are more founder-friendly because investors will be included in dilution in the future. Pre-money option pools are, however, most common.

c. Preference for liquidation

Investors who acquire preferred stock have a safety net in the form of liquidation preference. Investors are able to get some of their money back in the event of your startup failing.

d. Right to participate

The benefits of participation rights include two things: First, they receive a return on their investment before anyone else, and second, they receive a percentage of whatever is left over. For example, say an investor who owns 30% of the cap table owns preferred stock with $250k liquidation preference. A $2 million sale gives preferred stock holders $250k up front, along with a 30% stake in the remaining $1.75 million ($525k). For common shareholders and founders, that leaves $1.22 million.

e. Distributions

The purpose of dividends is to distribute profits to shareholders of a company. Cash or stock can be used to pay them. Preferred stockholders also receive dividends - it's one of the things that makes these stocks "preferred." Dividends are usually calculated at a percentage over time - between 5% and 15%.

f. Management board

In an early stage company, a Board of Directors may seem absurd, but as a company grows, it becomes increasingly important. The Board of Directors will therefore be included in most term sheets.

g. Shared Ownership percentage

A company's board often makes big decisions, but shareholders can also vote on some decisions. Share class ownership percentage should be included in your term sheet.

h. Rights of investors

Investor rights are usually outlined in term sheets. There are a lot of rights listed here, which is why you should consult a lawyer to ensure you're getting the best deal. The investor has a right to take or expect certain actions based on their investor rights.

7 notes

·

View notes

Note

I disagree, both in theory and in practice. As a personal preference for one's own psychological reasons, this kind of risk aversion makes sense. But I don't think it follows from the way you've described expectation, and I think there are definitely situations where this kind of foundational risk aversion can lead to significantly worse outcomes. I'm not really trying to argue here, so much as to provide a different perspective.

My main issue here, is that this is not actually the definition of expected value. Expected value is defined as an integral over possible outcomes, not a limit. E[f(X)] = sum_x f(x) P(x), where P(x) is the probability of an outcome. The thing about limits of random variables is the law of large numbers, which is a consequence of the definition of expected values in some cases. However it only holds for distributions in which 1) variances are finite, and 2) all samples are independent and identically distributed. And many of the cases we care about in real life do not satisfy these properties. The view of expected value as an integral is more robust and general than viewing it as a limit, and it also does not require an event to b repeatable. This is especially true for Bayesian stats, where probabilities are not viewed as limits of repeatable processes either, but as statements of uncertainty. A Bayesian expected value is not not making a statement about a limit, but giving the average result if you consider all possible outcomes weighted by how probable they are to occur.

Ultimately though, I think what you're getting at here is really more about risk-aversion. Which is fine, and there are many situations where being risk-averse is beneficial. However again, risk aversion is not universally optimal. There are some problems that necessitate risk-seeking behavior to get good results. Here's one:

Assume you walk into a casino with 10 slot machines. Each play from a machine has a payout of +1 or -1. Whenever you start up a new slot machine you haven't played before, it secretly generates a random number between 0 and 1 to decide what its win rate will be, and then keeps that win rate forever. So if you start a new machine that generates a win rate of 0.4, you'll have a 40% chance of winning every time you play that machine for the rest of the game. You don't get to see the win rate though, you have to estimate it by trying the machine over and over and observing how oftSo while I definitely understand risk-aversion as a personal preference, I don't agree with the idea that this risk aversion is actually a direct consequence of the definition of expectation in the way youve described.en you win. Your goal is to maximize your total winnings over time.

On your view, after you've played a couple of machines and picked the one that has given you the best payout so far, it seems like you wouldn't want to play other machines. Starting a new machine is a non-repeatable event, and on average the new machine is going to have a worse payout then the best of the first n machines you've tried. However, the go-to algorithm for this problem is explicitly risk-seeking. It's called Upper Confidence Bound, and the idea is that you calculate the x% confidence bound (= mean(i) + sqrt(-log(1-x)/# times i has been played)) for every machine i, and then play the machine with the highest upper confidence bound. This explicitly favors machines with high variances over machines that you have a high certainty about. Moreover, this algorithm is guaranteed to be optimal up to a constant, and all other algorithms that are optimal must be risk-seeking to approximately the same degree.

I think that risk aversion is so common because taking a naively risk-averse strategy is often necessary for actual utility-maximizing action later. You generally want a high-probability guarantee that something will work out, because it makes planning easier later, and minimizes the impact of potential unknown factors that are difficult to evaluate. But this is only usually, not always beneficial, and I think it's worth considering when it does not follow our actual values. In particular, curiosity is a risk-seeking behavior, and something that I think is also essential for being well-adapted to the world. If we value curiosity about the world, then we are explicitly doing so against the value of risk-adversity.

I would very much like to see your expectation post, as I expect I will strongly disagree with it, and I think it might be a contributor to a lot of our disagreements. If you were gambling with small amounts of money, would you try to maximise something other than expected winnings?

Ok, I'll just talk about the expectation thing again here.

Right, so, for a random variable X, the expected value E(X) intuitively represents the value we would expect to get in the limit after sampling from X very many times and averaging out. But this limit is important—E(X) and the intuitive notion of "expectation" don't line up outside the limiting case.

For example, say someone offers you the following bet: they flip a coin with a 0.1 probability of landing on heads and a 0.9 probability of landing on tails. If it comes up heads, you get ten dollars. If it comes up tails, I pay them one dollar. The expected value of this bet is 0.1*$10 - 0.9*$1 = 10 cents. This means that, in the limit, if I take the bet n times, I can expect (in the intuitive sense) to make 10n cents. And so, if the guy offering this bet allows me to take it as many times as I'd like, the rational thing is to take it a bunch and make some money!

But if the guy only offers the bet once, then the limiting behavior does not come into play. It doesn't matter that if I took the bet a bunch of times I would make money in the limit, because we're not in the limit (nor an approximation of it)! If he offers the bet once, then I cannot in any intuitive sense "expect" to make ten cents. Rather, I expect, with a 90% probability, to simply lose one dollar.

If I take this bet once, the overwhelmingly likely outcome is that I'll just lose money and gain nothing. So I wouldn't take the bet! I think this is perfectly rational behavior.

I said this all to someone once and they said it had to do with the fact that the relationship between money and utility isn't linear, but it's not about that at all. Replace dollars with, idk, direct pleasure and pain units in the above bet and I would still act the same way. It's about E(X) measuring limiting behavior and thus primarily according with the intuitive notion of expectation only when you sample from X a large number of times.

117 notes

·

View notes

Text

For diffusers, if you want to get a specific OC, you're going to need to train a LoRA. This is easiest if you're an artist or do 3d modeling work, because it benefits most from having a lot of clean examples of the characters:

(technically, dreambooth worked too, but it's much worse, and you can use a couple LoRA at once, where dreambooths didn't work that way.)

This can get very specific details (see here for a guide and some examples), but it does take some getting used to, and it's pretty far from the typical "just type words" thing. LoRA can also be made to represent styles, physical objects, or tone (or, for motion models, actions). For poses or composition, you'll probably want image segmentation or controlnet. Do be warned that using too many LoRA in a single image generation can cause unpredictable and usually undesirable effects.

If you're commissioning work to train a LoRA from, it's also worth checking with the original artist (unless their terms of use are already extremely broad): many will either not want the LoRA released publicly, works made with the LoRA published publicly, or not want it done at all.

For LLMs, there's three use cases I've found :

"It's on the tip of my tongue, what's the word?" LLMs are very much designed for 'what series of words would a professional environment use to match what I've put into it. For an extreme example, this use involved electrochemical machining of metal in a hobbyist context. Hobbyist ECM is limited to Defense Distributed knockoffs and randos making pins with the sketchiest looking video cameras possible and zero documentation. Professional ECM is big enough to be well-documented and explored, but depends on equipment and scenarios very far from my use, and most of the user-level documentation skips the theoretical background to give easy-to-read charts that won't apply to my use. The formula to calculate rough estimates for a given scenario have to exist, but unless you have a pretty friendly chemistry expert on your speed-dial, finding it is actually pretty hard. LLMs can't be trusted to do those formulas, or even to consistently name only ones that are real, but they can point out names to validate from other sources.

"How would this read to a person, and what changes would improve it?" LLMs are terrible at just continuing existing stories; not just failing to make decent tonal matching, but throwing tons of random twists or extra characters, or just breaking down out of their context window. But they're actually surprisingly good at looking over short blocks and giving feedback, even for genres that you'd expect AI to struggle with the most. Eg example edit pass of this intro (cw: furry m/m, m/f, story excerpt is not yet that smutty but it's pretty obvious where it's going). Obviously still pretty far from human quality, and if you're really focused in very uncommon genres, it'll give outright bad advice, but compared to "no betas we die like men" or even just passing an output directly to a beta reader, can save some time.

Roleplay-likes. Give an LLM a character and some expectations and... well, there's a nontrivial chance that it'll go schizoid, and even if it doesn't, you'll usually have to actively direct it out of character to get real activity rather than just continuing in the current scene and state forever. But even if you're not much into roleplay itself (I'm finding out I don't really like synchronous rp), it's surprisingly useful to mine for conversational gimmicks or experiment with what a character could sound like.

Also legit how are the rest of you getting these AIs to do useful things?

I can't seem to get them to do much useful. I tried using ChatGPT... 3 or 4 for GM writer's block when I was trying to think of clues and it was... very very mildly helpful. Like, the thing it said wasn't that useful but it made me think of something else that was.

Stablediffusion is good for making hentai of obscure characters but it sure doesn't understand any of my OCs.

Are they really good at programming and they only seem underwhelming to me because I don't do any programming?

18 notes

·

View notes

Text

particulate physics

particulate physical energy is analyzed in temperature and pressure form and related characteristics

temperature in physics

measure of particulate motion energies in Kelvin (K, 0ºC = 273.15 K)

two entities that come into contact with one another always exchange energies until they reach the same temperature and are thus in thermal equilibrium (TE)

0th thermodynamic law: two objects both in TE with the same third object are also in TE with one another

state of an element at changing temperatures across a given pressure can be visualized via phase-shift diagram (y=pressure, x=temperature)

triple point – element exists in solid, liquid, and gas phase at once

absolute 0 K temperature indicates that gas form has 0 pressure

laws of temperature and pressure

Boyle’s Law – volume varies indirectly with pressure, V = n/P

Charles’ Law – temperature varies directly with pressure, T = nP

combined, they make up the ideal gas law PV = NkT in physics terms, where N = molecules and k = Boltzmann’s constant at 1.38e-23 J/K

or PV = nRT in physics terms, where n = mols, R = gas constant 8.314 J/(mol K), and n • R = N • k as related by Avogadro’s number 6.022e23/mol

P, V, and T are state variables, i.e., they determine both present and future state of element/entity

for particulate kinetic energy of molecular movement, KE(avg., gas) = 1.5 • kT

or KE = (3 df / 2) • kT, where gas has 3 degrees of freedom

KE for a gas can be related between (3/2) • kT and 1/2 • mv^2, to find the velocity of the gas particles

mean free time and path

mean free time (tau, τ) – average time before particle collides with another

mean free path (lambda, λ) – average distance before particle collides with another

more collisions → shorter τ and λ due to increased probability of colliding in a given amount of time

distance λ can be calculated using the state variables and the equational relation λ = 1 / (4√2 • πr^2 • (N/V)) for r = radius of spherical molecule, N = molecules, V = volume

the above applies only if all molecules in the entity are moving

thermal expansion

change in entity’s dimensions is directly proportional to change in temperature

∆L = L(0) • α • ∆T, where L = length of entity, α = material-specific coefficient of linear expansion, and T = temperature in either ºC or K

for volume, ∆V = V(0) • 3α • ∆T, where 3α = β, the volume material-specific coefficient of linear expansion, and T = temperature in either ºC or K

total ∆L or ∆V is evenly distributed over entire entity, which factors into calculations for expansion distance

heat energy

heat, Q – the energy that flows between entities of different temperatures in order to reach thermal equilibrium

∆T varies directly with Q and indirectly with mass → ∆T = Q • (1/mc), where m = unit mass and c = material-specific specific heat capacity constant

-Q = energy leaving an entity and +Q = entity gaining energy

when heat is applied to an entity, it remains in the same physical state until all the energy needed to transition to the next is gathered from the transferred heat

the period of time in which this occurs is known as the phase transition and the gathered heat is the latent heat

Q(fusion/melting) = m • L(f), where m = mass and L(f) = heat constant of fusion

Q(vaporization/condensation) = m • L(v), where L(v) = heat constant of vaporization

sublimation/deposition = jump two phase transition steps

other forms of particulate energy transfer

radiation – electromagnetic energy transfer, with power = some n • T • Area of radiating region

radiation – Stefan-Boltzmann law is Q/s = σ • T⁴ • A, where σ is the Stefan-Boltzmann constant, A = total area, and Q/s = q = heat/sec

convection – transfer of liquid/gas motion energy, warm entity → cooler areas

conduction – transfer of particulate motion energy, fast entity → slower entities and transfer rate = n • ∆T(entity 1, entity 2)

4 notes

·

View notes

Text

Best Standard Deviation Calculator

Applications of Standard Deviation

Standard deviation is extensively utilized in experimental and commercial settings to check fashions in opposition to real-global facts. An instance of this in commercial programs is fine manage for a few product. Standard deviation may be used to calculate a minimal and most fee inside which a few thing of the product ought to fall a few excessive percent of the time. In instances in which values fall outdoor the calculated variety, it is able to be important to make modifications to the manufacturing system to make certain fine manage.

https://www.standarddeviationcalculator.io/

Standard deviation is likewise utilized in climate to decide variations in local climate. Imagine towns, one at the coast and one deep inland, which have the identical suggest temperature of 75°F. While this could set off the notion that the temperatures of those towns are honestly the identical, the fact will be masked if most effective the suggest is addressed and the usual deviation ignored. Coastal towns have a tendency to have a ways extra strong temperatures because of law through big our bodies of water, considering water has a better warmth ability than land; essentially,

this makes water a ways much less liable to modifications in temperature, and coastal regions continue to be hotter in winter, and cooler in summer time season because of the quantity of power required to alternate the temperature of water. Hence, even as the coastal town can also additionally have temperature levels among 60°F and 85°F over a given time period to bring about a median of 75°F, an inland town ought to have temperatures starting from 30°F to 110°F to bring about the identical suggest

.

Another vicinity wherein preferred deviation is essentially used is finance, in which it's miles regularly used to degree the related hazard in rate fluctuations of a few asset or portfolio of assets.

The use of preferred deviation in those instances presents an estimate of the uncertainty of destiny returns on a given funding. For instance, in evaluating inventory A that has a median go back of 7% with a preferred deviation of 10% in opposition to inventory B, that has the identical common go back however a preferred deviation of 50%, the primary inventory might in reality be the more secure choice, considering preferred deviation of inventory B is considerably large, for the precise identical go back. That isn't to mention that inventory A is definitively a higher funding choice on this scenario, considering preferred deviation can skew the suggest in both direction. While Stock A has a better chance of a median go back toward 7%, Stock B can probably offer a considerably large go back (or loss).

These are just a few examples of ways one would possibly use preferred deviation, however many extra exist. Generally, calculating preferred deviation is treasured any time it's miles favored to recognise how a ways from the suggest an average fee from a distribution may be.

1 note

·

View note

Text

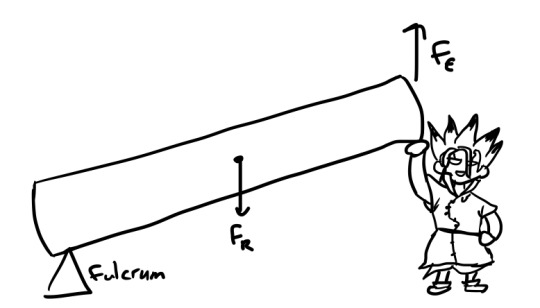

Levers

I left off on the last post with the question of how Senku based his pulley calculation on a mass of 500kg when he estimated the tree to be around 1000kg.

You could probably sort of conceptualize it as the rope and pulleys holding up half the tree’s weight and the ground holding up the other half, but how does this actually work?

The tree is a lever, another simple machine, and specifically a second class lever where the fulcrum, or pivot point, is on one end of arm (where the tree touches the ground), the load or resistance is somewhere on the middle of the arm, and the lifting force, or effort, is applied on the other end of the arm. Another real-world example of a second class lever is the wheelbarrow.

We can assume that the mass of the tree is evenly distributed along the whole tree, so the load is in the middle of the tree, where the center of gravity is.

So how is the upward force (the effort) equal to half of the downward force (the load)?

There are some equivalenty balancy things going on with torque or energy or work, since simple machines can’t create or destroy energy. Essentially, you want the product of the force of the load and the distance from the fulcrum a to be equal to the product of the force of the effort and that distance from the fulcrum b. (Torque is actually the cross product of force and radius, used for rotational things like this, so you could also say to balance the torques.)

F(r) x a = F(e) x b

F(r) is the force of the load, which is 10,000N downwards for the weight of the tree (1000kg * 10m/s/s gravity’s acceleration), and a is the distance of the load from the fulcrum, which is at the halfway point along the length of the tree.

On the other side of the equation, F(e) is the force of the effort, the upward force when Senku is pulling up on the tree with the rope and pulleys (or pushing up in my little doodle), and the distance b is the whole length of the tree, or twice the length of a.

10,000N x a = F(e) x 2a

Using some fancy algebra, you can take out the a from each side, leaving

10,000N = F(e) x 2

So the force that Senku has to exert on the other end of the tree is half of 10,000N, or 5000N, thus needing to lift 500kg of tree.

But wait! There’s more!

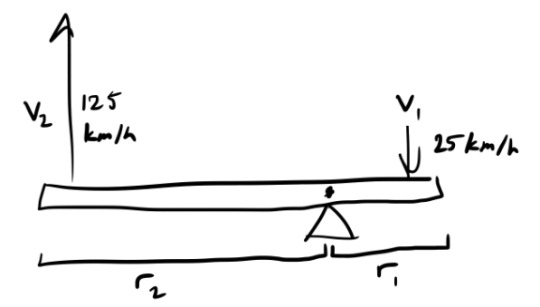

Senku uses a lever in Ch 39 as well, in his match against Ginro during the Grand Bout. This time, it’s a first class lever, where the fulcrum (Suika’s helmet) is in between the load and the effort.

The emphasis here is more on the tangential velocities of the two ends of the pole, rather than the mechanical advantage (how much force you use with the machine compared to without the machine) of the lever.

From the hyperphysics page I’ll link in the sources, “[f]or an object rotating about an axis, every point on the object has the same angular velocity.” This means that every point along the pole is rotating about the fulcrum with the same angular velocity ω, which is a measure of the angular displacement (how much of an angle something rotates around a circle) over time. Tangential velocity v is found by multiplying the angular velocity by the radius r (the distance from the fulcrum), so we get these equations:

v1 = ω * r1 v2 = ω * r2

Rearranging the equations, and keeping in mind that ω is the same in both equations, we get

v1/r1 = ω = v2/r2, or v1/r1 = v2/r2

So the tangential velocities and the radii are directly proportional. Since 125 is 5 times 25, we can tell that the length of the segment of the pole hitting Ginro, r2, is 5 times longer than the segment that Senku is stomping on, r1. It would also take Senku 5 times the force to stomp on the pole and lift the other end, but he’s putting his whole body weight into it, and anything with enough mass going 125 km/h (or 77.67 mph) straight into your crotch would probably hurt a lot regardless :D

If you have any questions on or corrections to this post, or have any suggestions for what to post about next, send me an ask!

Sources

http://www.oswego.edu/~dristle/PHY_206_powerpoints/Simple%20Machines5.14.pdf

https://en.wikipedia.org/wiki/Lever

https://en.wikipedia.org/wiki/Mechanical_advantage

https://en.wikipedia.org/wiki/Torque

http://hyperphysics.phy-astr.gsu.edu/hbase/rotq.html

Non-manga drawings by me

5 notes

·

View notes

Text

Non-radial Ejecta Morphologies of Martian Craters and their correlation with Latidudinal-Longitudinal Values